Imagen/DALL-E 2 (Image Generation AI)

The Spark Selects series features a monthly article from one of our team members.

To nurture creativity at Spark, we encourage our employees to discuss topics that interest them. The views expressed here are strictly those of the writers and not of Spark. If you are interested in our B2B content, head to the Spark's Voice Blog, and if you are a startup founder looking for inspirational and educational reading, check out our Startup Series.

Among the many fascinating capabilities of artificial intelligence (AI) today is the ability to generate images from textual descriptions. AI can now take a couple of sentences and create a corresponding image, often with great accuracy. This is known as Image Generation AI.

With the recent buzz surrounding OpenAI’s DALL-E 2 and Google’s Imagen, it’s time to dive deeper and understand how this technology works and where we are with it today.

What is Image Generation AI?

Image Generation AI is the process of creating a visual representation of something from textual descriptions. You can do this through natural language processing, computer vision, and machine learning algorithms. For example, given the text "a black cat on a sunny day," the AI might generate an image of a black cat sitting in a sunbeam.

How does Image Generation AI work?

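To generate images, Image Generation AI first needs to understand the text it is given. This is done with natural language processing (NLP), a branch of AI that deals with understanding human language. In practice, a neural network called a text encoder converts the prompt into a list of numbers, known as an embedding, that captures its meaning (DALL-E 2 builds on OpenAI's CLIP model for this step, while Imagen uses Google's T5 language model). Once the text has been encoded this way, the AI can begin to generate an image.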
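Text encoders turn a prompt into a fixed-length list of numbers, often called an embedding. The deliberately simplified Python sketch below makes that idea concrete. The functions `embed_text` and `cosine_similarity` are hypothetical, and the hashed bag-of-words approach is a toy stand-in: real encoders such as CLIP's or T5 are large trained neural networks that work very differently. The sketch only shows the shape of the step: text goes in, a vector of numbers comes out, and prompts that share words end up with similar vectors.

```python
import hashlib
import math
import re


def embed_text(prompt: str, dim: int = 64) -> list[float]:
    """Toy text encoder (assumption for illustration, not a real model).

    Each word is hashed into one of `dim` buckets, and the bucket counts
    are scaled to unit length.
    """
    vector = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", prompt.lower()):
        # md5 gives a deterministic hash; Python's built-in hash() is salted per process.
        digest = hashlib.md5(word.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "little") % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Vectors from embed_text are unit length (unless the prompt is empty),
    so their dot product equals their cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


a = embed_text("a black cat on a sunny day")
b = embed_text("A sunny day, a black cat on")
print(len(a))  # 64
print(round(cosine_similarity(a, b), 6))  # 1.0
```

Because this toy ignores word order, the two prompts above receive identical vectors. Trained encoders take word order and context into account, which a simple bag of words cannot.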

Creating the image draws on computer vision and machine learning. Computer vision is the field of AI concerned with interpreting and understanding digital images. Rather than analysing pixels one by one, modern systems learn from large collections of captioned images how visual patterns such as shapes, colours, and textures relate to words.

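Machine learning algorithms then create the final image. DALL-E 2 and Imagen both use diffusion models for this step: the model starts from an image of pure random noise and removes a little of that noise at each step, guided by the encoded text, until an image matching the description emerges.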
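Systems such as DALL-E 2 and Imagen generate images with diffusion models, which start from random noise and remove it step by step. The loop can be sketched in a few lines of Python, under loud assumptions: the "image" is a flat list of four numbers, the noise schedule is a simple cosine-shaped curve, and the trained, text-conditioned network is replaced by a hypothetical `oracle` that already knows the target image. The update rule is the deterministic DDIM step, one of several published sampling methods and not necessarily the one DALL-E 2 or Imagen uses.

```python
import math
import random


def alpha_bar(t: int, steps: int) -> float:
    """Signal level at step t: 1.0 is a clean image, ~0 is pure noise.

    A simple cosine-shaped schedule, chosen here for illustration.
    """
    return math.cos((t / steps) * math.pi / 2) ** 2


def sample(predict_clean, size: int, steps: int = 50, seed: int = 0) -> list[float]:
    """Deterministic DDIM-style sampling: start from noise, denoise step by step."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # pure random noise
    for t in range(steps, 0, -1):
        ab_t, ab_prev = alpha_bar(t, steps), alpha_bar(t - 1, steps)
        x0_hat = predict_clean(x, t)  # the "model's" guess of the clean image
        # Noise implied by the current noisy image and the clean-image guess.
        eps_hat = [(xi - math.sqrt(ab_t) * ci) / math.sqrt(1.0 - ab_t)
                   for xi, ci in zip(x, x0_hat)]
        # Re-mix guess and noise at the slightly lower noise level of step t - 1.
        x = [math.sqrt(ab_prev) * ci + math.sqrt(1.0 - ab_prev) * ei
             for ci, ei in zip(x0_hat, eps_hat)]
    return x


# Hypothetical stand-in for a trained network conditioned on a prompt:
# it simply returns the 4-pixel image that "matches the description".
TARGET = [0.9, 0.1, 0.1, 0.9]


def oracle(x: list[float], t: int) -> list[float]:
    return TARGET


result = sample(oracle, size=len(TARGET))
print([round(v, 3) for v in result])  # [0.9, 0.1, 0.1, 0.9]
```

With a perfect oracle the loop lands exactly on the target. A real network only guesses the clean image, and its guesses tend to improve as the noise shrinks, which is one reason generation runs over many small steps.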

What are the potential applications for Image Generation AI?

The applications of this technology are vast. With Image Generation AI, we can generate realistic images of people, places, and things that don’t exist yet, and even of imaginary creatures. This can speed up production pipelines in many kinds of businesses.

Here are just a few examples of industries where Image Generation AI can be applied:

1. Design and Fashion

Imagine being able to generate images of clothing items or home decor before they’re produced. This would allow for better design decisions and faster turnaround times for new products.

2. Art

Artists can now use AI to create realistic images of their subjects or even completely new and imaginary ones. This opens up a whole new world of possibilities for what art can be.

3. Advertising and Marketing

Advertisers and marketers can use image generation AI to create realistic renders of products or services. This would allow for more accurate and persuasive advertising.

4. Gaming

The gaming industry can use image generation AI to create realistic character models or environments. This would result in more immersive and lifelike games.

Advancements in Image Generation AI

OpenAI and Google are two of the companies leading the text-to-image AI revolution. However, although both have shared details of their work, only a select few can access the technology so far.

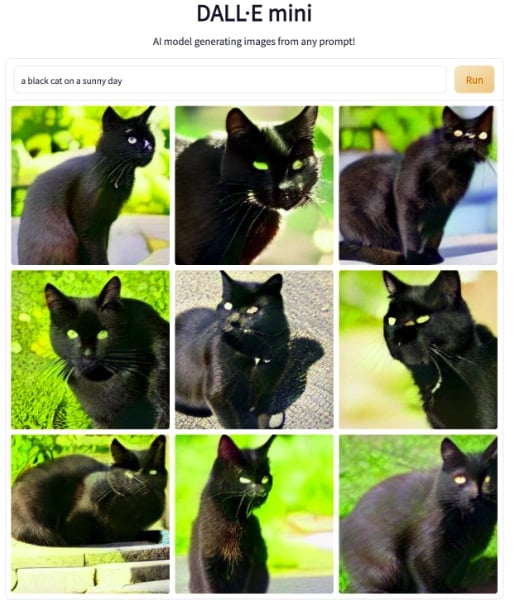

One Image Generation AI that the public can access is DALL-E Mini, an app that produces nine images on a 3x3 grid based on a user’s text prompt. DALL-E Mini’s outputs have become a social media phenomenon, with users creating memes out of the most absurd text descriptions.
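Laying out nine outputs as a 3x3 grid is simple bookkeeping. The sketch below uses a hypothetical helper, `make_grid`, and is not DALL-E Mini's actual code: it treats each image as a 2-D list of pixel values and assumes all nine images share the same size.

```python
def make_grid(images: list[list[list[int]]], rows: int = 3, cols: int = 3) -> list[list[int]]:
    """Tile equally sized images (2-D lists of pixels) into a rows x cols grid."""
    if len(images) != rows * cols:
        raise ValueError(f"expected {rows * cols} images, got {len(images)}")
    height, width = len(images[0]), len(images[0][0])
    if any(len(img) != height or any(len(row) != width for row in img) for img in images):
        raise ValueError("all images must have the same height and width")
    grid = []
    for r in range(rows):          # each row of tiles
        for y in range(height):    # each pixel row within that tile row
            line = []
            for c in range(cols):  # append the matching pixel row of every tile
                line.extend(images[r * cols + c][y])
            grid.append(line)
    return grid


# Nine 2x2 "images", each filled with its own index 0-8.
tiles = [[[i, i], [i, i]] for i in range(9)]
for line in make_grid(tiles):
    print(line)
```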

Whether its results are a hit or a miss, DALL-E Mini does give us a tangible grasp of Image Generation AI’s massive potential. While the world anticipates the public launch of DALL-E 2 and Imagen, let’s review the features of each.

OpenAI’s DALL-E 2

One of the most impressive examples of Image Generation AI to date is OpenAI’s DALL-E 2. This system is a highly improved version of the original DALL-E. Unlike its predecessor, which often generated blurry and inconsistent images, DALL-E 2 can create accurate, high-resolution images at lower latencies. Users also have the option of applying different art techniques and styles.

Another feature DALL-E 2 boasts is “inpainting,” the ability to edit an existing picture by adding or removing elements. What is remarkable here is that the AI also takes shadows, reflections, and textures into account so that the new elements look like a natural fit.
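How DALL-E 2 generates the new content is not covered here, since that requires the trained model. What can be sketched is the role of the mask that confines an edit to one region. As a minimal, hypothetical example, the `composite` function below keeps the original pixels wherever the mask is 0 and uses newly generated pixels wherever it is 1, with fractional mask values blending the two at the edges.

```python
def composite(original, generated, mask):
    """Blend two same-sized images (2-D lists) using a mask of values in [0, 1].

    mask == 0 keeps the original pixel, mask == 1 takes the generated pixel,
    and values in between mix the two (useful for soft edges).
    """
    return [
        [m * g + (1 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


photo = [[10, 10, 10],
         [10, 10, 10]]
new_content = [[99, 99, 99],
               [99, 99, 99]]
# Edit only the right-hand column, with a soft edge in the middle column.
mask = [[0, 0.5, 1],
        [0, 0.5, 1]]
print(composite(photo, new_content, mask))  # [[10, 54.5, 99], [10, 54.5, 99]]
```

In an editing tool, the mask would typically come from the region the user erases or selects.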

Google’s Imagen

Google’s Imagen was announced just a month after DALL-E 2. It is described as a text-to-image diffusion model “with an unprecedented degree of photorealism and a deep level of language understanding.” Compared with DALL-E 2’s images, Imagen seems more focused on photorealism.
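In the standard formulation of diffusion models, training works in the opposite direction from generation: noise is added to real images in controlled amounts, and the model learns to predict that noise. The Python sketch below shows the forward (noising) step using the usual formula x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise, where `alpha_bar` controls how much of the original image remains. The function name `add_noise` and the toy four-pixel image are illustrative assumptions; the sketch shows the general technique, not Imagen's actual training setup.

```python
import math
import random


def add_noise(clean: list[float], alpha_bar: float,
              rng: random.Random) -> tuple[list[float], list[float]]:
    """Forward diffusion: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise.

    alpha_bar = 1.0 leaves the image unchanged; alpha_bar = 0.0 gives pure noise.
    Returns (noisy_image, noise); the noise is what the model is trained to predict.
    """
    if not 0.0 <= alpha_bar <= 1.0:
        raise ValueError("alpha_bar must be between 0 and 1")
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    noisy = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
             for x, e in zip(clean, noise)]
    return noisy, noise


rng = random.Random(0)
image = [0.9, 0.1, 0.1, 0.9]  # a toy four-pixel "image"
for level in (1.0, 0.5, 0.0):
    noisy, noise = add_noise(image, level, rng)
    print(level, [round(v, 2) for v in noisy])
```

Pairs of `noisy` and `noise` at many different levels make up the training examples; generation then runs the process in reverse, starting from pure noise.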

Imagen was trained on large web-scraped datasets, which Google has warned can reflect biased and oppressive views. This is why the company has not yet made the model publicly accessible.

The possibilities for AI are endless, and image generation is just one of them. As researchers and developers work to improve these technologies and reduce cultural biases, Image Generation AI will become more and more commonplace in our everyday lives.