The Testing Pyramid

At Spark, we are always customer-centric, and testing by our QA and development teams is part of making that happen. So how do we ensure our customers' cloud-based solutions are tested at the correct levels?

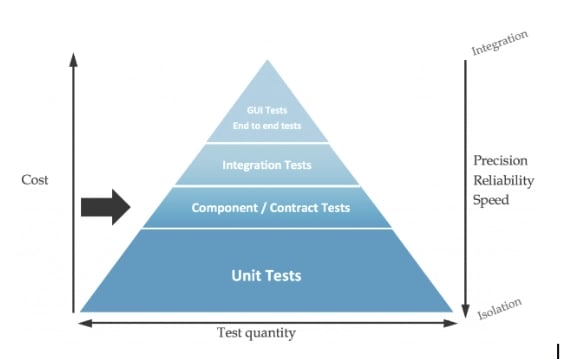

We use the Test Pyramid (TP for short!) to make that decision. If you have yet to hear about it, the Test Pyramid is a metaphor that helps organise tests by grouping them into levels of different granularity. It also gives a visual representation of how many tests sit at each level.

The TP is organised by speed and cost. It is generally structured in three or four levels (for example unit tests, integration or service tests, end-to-end and UI tests, and manual tests).

At the top of the TP, we place the slowest and most expensive tests, and we run fewer of them. This level is usually taken up by manual, end-to-end, and UI tests.

We place integration or service tests at the middle level. They have a medium speed and cost compared to the top and bottom layers.

At the bottom, we place unit tests, which are the fastest and cheapest of all.

So how did we adapt this approach to our needs?

Different projects require different approaches, but we have an automation-first mindset, so we keep the number of manual tests as low as possible. Following the same cost principles, we analyse which end-to-end tests are necessary and keep their volume minimal. We rely on a larger share of integration tests than is typical for non-cloud-based architectures. This need comes from using a wide variety of AWS components that interact with one another, and from wanting to check that these groups of features and services work together as expected. At the bottom, we keep a reasonable volume of unit tests. This follows best practices and verifies that the code behaves as designed at the lowest level.
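To make the difference between the bottom and middle levels concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration: `build_order_event`, `process_orders`, and the in-memory queue and table are simple stand-ins for real cloud components, not our production code. The unit-level tests check one function in isolation, while the integration-level test checks that several pieces work together.

```python
def build_order_event(order_id, amount_cents):
    """Pure function with no dependencies: a natural target for a unit test."""
    if amount_cents < 0:
        raise ValueError("amount_cents must be non-negative")
    return {"order_id": order_id, "amount_cents": amount_cents}


class InMemoryQueue:
    """Hypothetical stand-in for a managed message queue."""

    def __init__(self):
        self.messages = []

    def send(self, message):
        self.messages.append(message)

    def receive_all(self):
        # Hand back everything queued so far and leave the queue empty.
        received, self.messages = self.messages, []
        return received


class InMemoryTable:
    """Hypothetical stand-in for a key-value table."""

    def __init__(self):
        self.items = {}

    def put(self, key, item):
        self.items[key] = item


def process_orders(queue, table):
    """Drain the queue, store each order in the table, return the count."""
    count = 0
    for message in queue.receive_all():
        table.put(message["order_id"], message)
        count += 1
    return count


# Unit level: one function, no collaborators.
def test_build_order_event_returns_expected_payload():
    event = build_order_event("o-1", 500)
    assert event == {"order_id": "o-1", "amount_cents": 500}


def test_build_order_event_rejects_negative_amounts():
    try:
        build_order_event("o-1", -1)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative amount")


# Integration level: producer, queue, consumer, and table together.
def test_orders_flow_from_queue_to_table():
    queue, table = InMemoryQueue(), InMemoryTable()
    queue.send(build_order_event("o-1", 500))
    queue.send(build_order_event("o-2", 750))

    assert process_orders(queue, table) == 2
    assert table.items["o-2"]["amount_cents"] == 750
    assert queue.receive_all() == []  # the queue was fully drained
```

The functions follow the `test_` naming convention, so a runner such as pytest can collect them. In a real project the integration test would more likely run against deployed or emulated services rather than in-memory classes.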

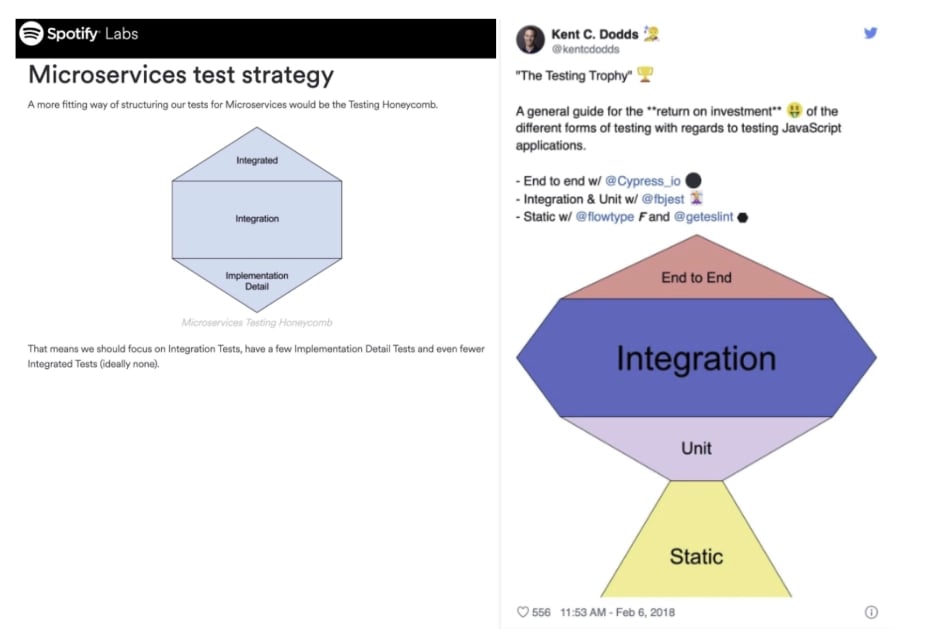

The right distribution of tests across levels varies from one project to another, and that distribution is what gives the TP its shape. Some of the approaches we apply are closer to the Spotify Testing Honeycomb or to the Testing Trophy proposed by Kent C. Dodds. Because we rely heavily on AWS services, focusing on integration tests tends to be more effective.
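As a rough illustration of how the distribution determines the shape, the sketch below labels a set of test counts. The function `describe_shape`, its labels, and the example numbers are all invented for this article; it is a simple heuristic, not a formal definition of any of these models.

```python
def describe_shape(unit, integration, end_to_end):
    """Name the rough shape implied by the number of tests at each level.

    Illustrative heuristic only: a classic pyramid narrows from unit tests
    upwards, while honeycomb- and trophy-style suites are widest at the
    integration level.
    """
    if min(unit, integration, end_to_end) < 0:
        raise ValueError("test counts must be non-negative")
    if unit > integration > end_to_end:
        return "pyramid"
    if integration > unit and integration > end_to_end:
        return "honeycomb or trophy"
    return "other"


# Made-up counts, for illustration only.
print(describe_shape(unit=300, integration=80, end_to_end=10))  # pyramid
print(describe_shape(unit=60, integration=150, end_to_end=15))  # honeycomb or trophy
```

A check like this could run against the counts reported by a test runner to show when a suite drifts away from the shape a team intended.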

What are some of the tools and approaches we use along the Test Pyramid? (Just to name a few)

There is usually no single best programming language or tool (although we are big fans of Python and JavaScript/TypeScript). The needs typically depend on the project, and the cloud is a fast-paced environment with diverse technologies. Rather than define a fixed set of tools, we stay open to exploring what best suits our customers' needs. To that end, we usually consider:

- Agile methodologies
  - Keeping in mind the Manifesto for Agile Software Development

- Behaviour Driven Development (BDD)
  - Understanding specs and sharing a common language through Gherkin

- Mocking and stubbing
  - Simulating objects or functionality for testing

- Continuous Integration / Continuous Deployment
  - Setting up automated processes to run the tests defined in the pyramid

- Coverage report tools
  - Understanding how much of the code our tests actually exercise
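As an example of the mocking and stubbing item, here is a minimal sketch using Python's standard `unittest.mock` module. The function `archive_report`, the bucket name, and the key layout are hypothetical. The sketch also assumes a storage client that exposes a `put_object(Bucket=..., Key=..., Body=...)` method, which is the shape of the boto3 S3 client call, but any object with such a method would do.

```python
from unittest.mock import Mock


def archive_report(storage_client, bucket, report_id, body):
    """Store a report through the given client and return its object key.

    `storage_client` is assumed to expose put_object(Bucket, Key, Body).
    """
    key = f"reports/{report_id}.txt"
    storage_client.put_object(Bucket=bucket, Key=key, Body=body)
    return key


def test_archive_report_uploads_under_expected_key():
    # Mock: records calls so the test can verify the interaction afterwards.
    client = Mock()

    key = archive_report(client, "demo-bucket", "2024-01", b"hello")

    assert key == "reports/2024-01.txt"
    client.put_object.assert_called_once_with(
        Bucket="demo-bucket", Key="reports/2024-01.txt", Body=b"hello"
    )


def test_archive_report_propagates_storage_errors():
    # Stub: force the dependency to fail so the error path can be exercised.
    client = Mock()
    client.put_object.side_effect = ConnectionError("storage unavailable")

    try:
        archive_report(client, "demo-bucket", "2024-01", b"hello")
    except ConnectionError:
        return
    raise AssertionError("expected ConnectionError to propagate")
```

Because the client is passed in as an argument, the tests need no network access or cloud credentials, which keeps them fast enough to sit at the base of the pyramid.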

Conclusion

To keep this article short, we did not expand on many related topics. Our aim was to briefly introduce how testing effort is distributed and some common takes on balancing the TP.

We keep it slim at the top, with few manual tests and a balanced volume of UI and end-to-end tests. It looks a little more "chunky" at the integration and service level, as building and deploying infrastructure, and the communication between services, are vital in the cloud. Finally, we stay flexible about how large the base of unit tests needs to be.

I hope this article has taught you something new, or at least encourages you to experiment with different shapes of the TP or with alternative approaches, such as the testing honeycomb or the testing trophy, which distribute the balance differently.